Computing Science Seminars, Autumn 2014

The Division of Computing Science and Mathematics presents the following seminars. Unless otherwise stated, seminars will take place in Room 2A73 of the Cottrell Building, University of Stirling from 15.00 to 16.00 on Friday afternoons during semester time. For instructions on how to get to the University, please look at the following routes.

Autumn 2014

| Date | Presenter | Title/Abstract |
|---|---|---|
| Friday 26 Sep | Prof John McCall, Professor of Computer Science, Director of IDEAS Research Institute, Robert Gordon University of Aberdeen | Telling the Wood from the Trees: Essential Structure in Model-based Search. Problem structure, or linkage, refers to the interaction between variables in an optimisation problem. Discovering structure is a feature of a range of search algorithms that use structural models at each iteration to determine the trajectory of the search. Examples include Information Geometry Optimisation (IGO), Covariance Matrix Adaptation Evolution Strategy (CMA-ES), Bayesian Evolutionary Learning (BEL) and Estimation of Distribution Algorithms (EDA). In particular, EDAs use probabilistic graphical models to represent structure learned from evaluated solutions. Various EDA approaches using trees and graphs have been developed and evaluated on a range of benchmarks. Benchmarks typically have "known problem structure" determined a priori. In practice, the relationship between the problem structure, the structures found by the EDA and algorithm success is a complex one. This talk will explore these ideas using a classification of problems based on monotonicity invariance. We completely classify all functions on 3 bits and show that conventional concepts of the relationship between structure and problem difficulty are too brittle to capture the subtlety even of this low-dimensional function space. The talk concludes with a discussion of related work on algorithm classification and some speculative ideas for topological notions of essential problem structure. |
| Friday 3 Oct | Dr Savi Maharaj, Lecturer in Computing Science, Computing Science and Mathematics, University of Stirling | Using Computers to Model and Study Human Behaviour - First Steps. The choices we make and the way we behave have important consequences both for us as individuals and for society. Computational methods can help us both to model and to study human behaviour through techniques such as agent-based modelling and virtual experiments. I have been using both of these approaches in my work. As human behaviour is of course a huge topic, this talk will not even attempt to cover it comprehensively, but will focus on two (possibly three) projects. These involve modelling and investigating behaviour in the contexts of epidemic spread and environmental protection policy, and are collaborations with psychologists and/or economists. The talk will cover the kinds of models used, the experimental techniques used to study real human behaviour in order to parameterise the models, some tentative results, and the difficulties encountered in inducing (and recognising) realistic behaviour in virtual experiments. The talk will end with a speculative discussion of the way forward, with a surprise bonus contribution from students Craig Docherty and Daniel Gibbs. |
| Friday 10 Oct | Alasdair P Anderson, Chief Architect, Big Data for HSBC Group, HSBC Bank PLC | Data Monetization: Leveraging Legacy with Big Data. HSBC is a large international company, with about 250,000 staff and lots of data (about 65 petabytes across more than 7,000 systems). They need a single globalised platform, rapidly, with advanced analytical capabilities. How can they go about getting this? |
| Friday 17 Oct | Dr Iain J Gallagher, Lecturer in Health & Exercise, School of Sport, University of Stirling | Big Data Analysis in Biology & Sport. Big data techniques have been routinely used in biology for a number of years. These large data sets are gathered using 'omics' technologies and allow biologists to examine almost all of the entities that make cells and tissues function at a particular moment in time. This sheds light on important differences in the molecular details of diseased and normal tissue, in the processes and pathways active during development or disease, and in the small molecular differences that predispose us as individuals to disease. Thus, as well as providing mechanistic insight, big data in biology is beginning to allow the stratification of individuals by disease risk. In sport, big data is also becoming more and more important. Applications include prediction of player performance and propensity for injury, real-time object detection, and the analysis of results and betting patterns (both legal and illegal). In this talk I will give an overview of big data and the analysis of these data in both biology and sport. After introducing the concepts of big data in these domains, I will use a 'toy' but real dataset of Olympic heptathlon results to demonstrate how dimensional reduction can help us identify athletic characteristics that could be used for competitive advantage. I will then give a brief overview of the 'omics' technologies currently used in biology to gather large amounts of information – snapshots of cellular or tissue activity. I will briefly discuss my tools of choice for big data analysis and the concept of 'reproducible research'. Finally, time permitting, I will present a couple of examples briefly illustrating how a programmatic approach can inform biological questions. |
| Friday 24 Oct | Dr Rachel Lintott, Research Assistant, Computing Science and Mathematics, University of Stirling | Using Process Algebra to Model Radiation Induced Bystander Effects. Radiation induced bystander effects are secondary effects caused by the production of chemical signals by cells in response to radiation. I will present a Bio-PEPA model which builds on previous modelling work in this field to predict: the surviving fraction of cells in response to radiation, the relative proportion of cell death caused by bystander signalling, the risk of non-lethal damage and the probability of observing bystander signalling for a given dose. This work provides the foundation for modelling bystander effects caused by biologically realistic dose distributions, with implications for cancer therapies. |
| Friday 31 Oct | Mid Semester | Mid Semester |
| Friday 7 Nov | Dr Nanlin Jin, Lecturer, Computer Science and Digital Technologies, Northumbria University, Newcastle | Smart Meter Data Analytics by Subgroup Discovery. Based on a recent article. This talk will cover the following three aspects: |
| Friday 14 Nov | Dr Alasdair Rutherford, Lecturer in Quantitative Methods, School of Applied Social Science, University of Stirling | Working with and analysing administrative data from linked health and social care records in Scotland. Better evidence is needed on how social care and health care interact to enable people to live independently in their own homes for as long as possible. In most countries, individual-level administrative data on social care, housing support and health care are collected separately by different organisations. Linking such datasets could enable service providers, planners and policy makers to gain an improved understanding of how these services can work together more effectively to improve outcomes for patients and service users. In collaboration with the Scottish Government’s Health Analytical Services Division and the Information Services Division of NHS Scotland, we have analysed a new dataset produced as a pilot project which brings together detailed information on individual social care packages, prescribing, diagnoses (including dementia and mental health) and hospital episodes. This enables us to explore pathways through health and social care from the individual perspective. This presentation will describe the building of the dataset and the challenges in preparing and analysing the data, and provide some initial findings from the study. |
| Friday 21 Nov, Room 4B108 | Dr Kira Mourao, Research Associate, School of Informatics, University of Edinburgh | Learning Relational Action Models in Robot Worlds. When a robot, dialogue manager or other agent operates autonomously in a real-world domain, it uses a model of the dynamics of its domain to plan its actions. Typically, these domain models are prespecified by a human designer and then used by AI planners to generate plans. However, creating domain models is notoriously difficult. Furthermore, to be truly autonomous, agents must be able to learn their own models of world dynamics. An alternative, therefore, is to learn domain models from observations, either via known successful plans or through exploration of the world. This route is also challenging, as agents often do not operate in a perfect world: both actions and observations may be unreliable. In this talk I will present a method which, unlike other approaches, can learn both from observed successful plans and from action traces generated by exploration. Importantly, the method is robust in a variety of settings, able to learn useful domain models when observations are noisy and incomplete, or when action effects are noisy or non-deterministic. The approach first builds a classification model to predict the effects of actions, and then derives explicit planning operators from the classifiers. Through a range of experiments using International Planning Competition domains and a real robot domain, I will show that this approach learns accurate domain models suitable for use by standard planners. I will also demonstrate that, where settings are comparable, the results equal or surpass the performance of state-of-the-art methods. |
| Friday 28 Nov | John Page, Senior Solutions Architect, MongoDB, Glasgow | MongoDB, a Big data solution for the real world. This talk will introduce the concepts behind MongoDB, the world's most prevalent and popular big data solution and leading NoSQL database, explaining what matters when you have a 'Big Data' problem in a real-world application and how many of the world's leading companies address this. |
| Friday 5 Dec | Patricia Ryser-Welch, Department of Electronics, The University of York | Can Plug-and-Play hyper-heuristic help create state-of-the-art human-readable algorithms? Hyper-heuristics are defined as heuristics optimising heuristics. Previous approaches focus on the quality of the numerical solutions obtained; very little discussion concentrates on the generated algorithms themselves. Some hyper-heuristic frameworks tend to be highly constrained and their architecture prevents the search from finding state-of-the-art algorithms. Often the generated algorithms are also not human-readable. We discuss a new interpretation of hyper-heuristic techniques that explicitly and openly offers a flexible and modular architecture. This, together with analysis of evolved algorithms, could lead to human-competitive results. |

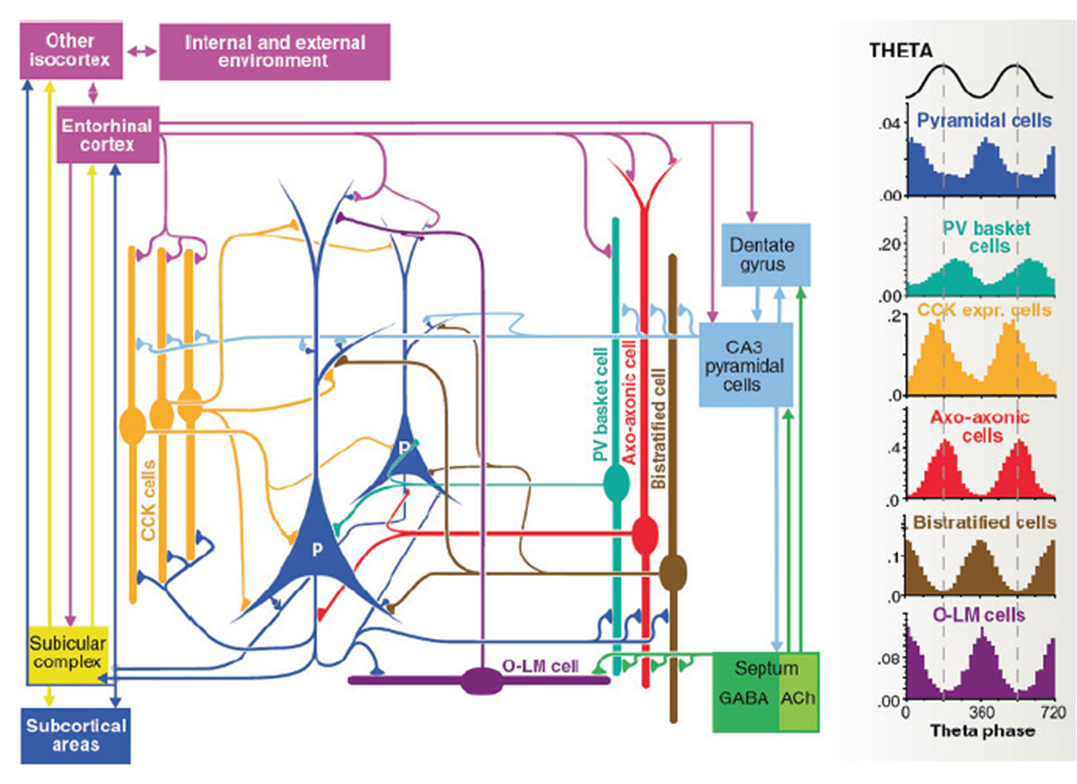

Top image: A simplified view of the neural circuit of the CA1 region of the mammalian hippocampus (Fig. 2, p. 284, "Hippocampal Microcircuits", co-edited by Prof. Bruce Graham, Springer 2010). Encoding and retrieval of patterns of information in this circuit have been studied by computer simulation, as detailed in Cutsuridis, Cobb & Graham, Hippocampus 20:423-446, 2010.

Courtesy of Prof. Bruce Graham

Last Updated: 25 November 2014.